Apple's "Illusion of Thinking" Paper and the Concept of AI Tool Half-Life

No matter how an "AI agent" is defined (or improved upon), the paper argues that complex reasoning will "collapse"; further research suggests that the usefulness of AI tools could be measured with a half-life.

I have been asked a few times in the past two weeks about the Illusion of Thinking paper from Apple (Shojaee et al., 2025), which exposed limitations of LLMs (not a popular topic among influencers, but the subject of my entire blog). I enjoyed both the paper and its popularity, as this discussion seems completely lost on 98% of the bots acting as influencers on social media. There have been many responses to it since, many angry and some legitimate.

Wait. What was this paper and why was it controversial?

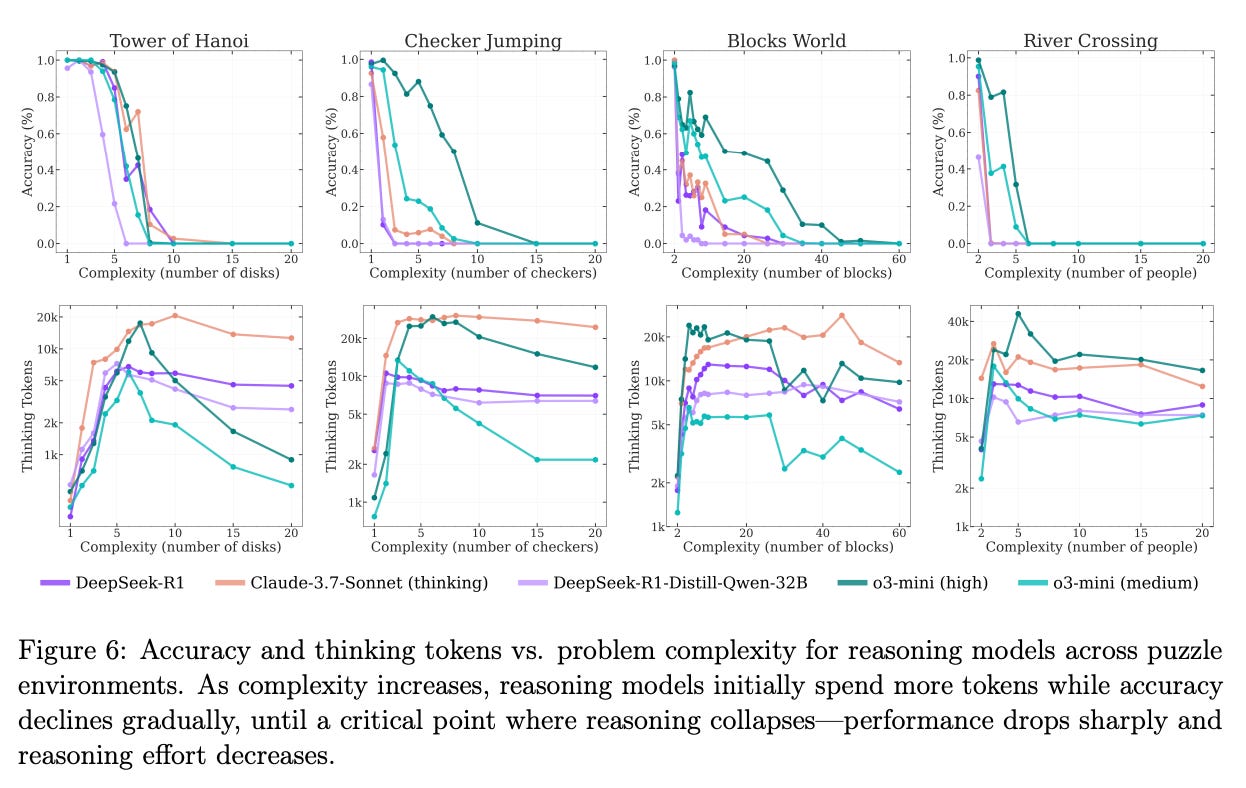

Apple's paper claimed that Large Reasoning Models (LRMs) suffer "accuracy collapse" on complex puzzles, suggesting (correctly) fundamental limits to their reasoning. Responses to the paper argued that this collapse reflects experimental flaws rather than cognitive failure.

Criticisms of the paper from others:

Token limits: Models truncated solutions that would have exceeded their token limits (e.g., Tower of Hanoi) 3.

Impossible tasks: River Crossing puzzles included unsolvable instances, penalizing models unfairly 4.

Rigid evaluation: Automated scoring ignored partial solutions or strategic truncation 5.
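To see why token limits bite so hard on Tower of Hanoi: an optimal solution for n disks requires 2^n - 1 moves, so the written-out answer grows exponentially with puzzle size. The sketch below generates the optimal move list recursively and checks its estimated length against an output budget. `TOKENS_PER_MOVE` and `OUTPUT_BUDGET` are hypothetical, illustrative values, not measurements of any particular model.

```python
# Minimal sketch: Tower of Hanoi solution length versus an output-token budget.
# TOKENS_PER_MOVE and OUTPUT_BUDGET are hypothetical, illustrative values.

TOKENS_PER_MOVE = 10      # assumed tokens to write one move, e.g. "[1, 0, 2],"
OUTPUT_BUDGET = 64_000    # assumed output-token limit for a single response


def hanoi_moves(n: int, src: int = 0, aux: int = 1, dst: int = 2) -> list[tuple[int, int, int]]:
    """Return the optimal move list as (disk, from_peg, to_peg) tuples."""
    if n == 0:
        return []
    # Move n-1 disks onto the spare peg, move the largest disk, then restack on top of it.
    return (
        hanoi_moves(n - 1, src, dst, aux)
        + [(n, src, dst)]
        + hanoi_moves(n - 1, aux, src, dst)
    )


def fits_in_budget(n: int) -> bool:
    """Estimate whether writing out every move stays within OUTPUT_BUDGET."""
    return len(hanoi_moves(n)) * TOKENS_PER_MOVE <= OUTPUT_BUDGET


if __name__ == "__main__":
    for n in range(5, 16):
        moves = len(hanoi_moves(n))
        assert moves == 2**n - 1  # length of the optimal solution
        print(f"{n:2d} disks: {moves:6d} moves, ~{moves * TOKENS_PER_MOVE:7d} tokens, "
              f"fits={fits_in_budget(n)}")
```

Under these assumed numbers the full solution stops fitting between 12 and 13 disks; each extra disk doubles the required output, so no fixed budget keeps up for long.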

My response to what the criticisms imply can be solved: limitations of context windows

While the issues raised about the paper's experiments are valid, the critics' most valid point is the first one: the context window limits each reasoning step, so the model moves on to the next step of inference without every detail of the solution held in its context window. Will this improve? Context windows certainly limit many potential use cases of AI tools and are expanding over time, but their inherent issues are well documented.

Even if we presume context windows can be expanded without limit, and even with Gemini Pro's 1M-token recall 6, RAG systems reveal inherent brittleness:

Placement sensitivity: Performance plummets when "needles" are buried in mid-context 6.

Prompt fragility: Minor changes (e.g., "return relevant sentences") boosted Claude's accuracy from 27% to 98% 7.
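The placement-sensitivity result comes from "needle in a haystack" style tests: a single fact (the needle) is inserted at varying relative depths of a long filler context, and the model is asked to retrieve it. The sketch below only builds such prompts and does not call any model API; the filler text, needle, question, and the `build_haystack_prompt` and `needle_position` helpers are all hypothetical.

```python
# Minimal sketch of a "needle in a haystack" prompt builder.
# All strings and helper names are hypothetical; no model is called here.

FILLER = "The quick brown fox jumps over the lazy dog. "   # assumed filler sentence
NEEDLE = "The secret code for the vault is 4217. "         # assumed fact to retrieve
QUESTION = "What is the secret code for the vault?"


def build_haystack_prompt(n_filler: int, depth: float) -> str:
    """Place NEEDLE at a relative depth (0.0 = start, 1.0 = end) among n_filler sentences."""
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be between 0.0 and 1.0")
    sentences = [FILLER] * n_filler
    sentences.insert(round(depth * n_filler), NEEDLE)
    return "".join(sentences) + "\n\n" + QUESTION


def needle_position(prompt: str) -> float:
    """Return where the needle starts, as a fraction of the context before the question."""
    context = prompt.split("\n\n")[0]
    return context.index(NEEDLE) / len(context)


if __name__ == "__main__":
    # Sweep depths; a real test would send each prompt to a model and score the answer.
    for depth in (0.0, 0.25, 0.5, 0.75, 1.0):
        prompt = build_haystack_prompt(n_filler=1000, depth=depth)
        print(f"depth={depth:.2f} -> needle at {needle_position(prompt):.2f} of context")
```

Plotting retrieval accuracy against `depth` is what exposes the mid-context dip; changing only the final instruction while holding the haystack fixed is the kind of minor prompt change the fragility point refers to.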

This underscores agents' dependence on precise retrieval, not just raw context size. Therefore, there is no known solution to these issues for AI agents: every solution presented so far, including o3 and other hyped reasoning models released in the past year, still exhibits inherent compounding error.
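A simple way to see why compounding error is hard to engineer away: if each step of an agent's task succeeds independently with probability p, the whole n-step task succeeds with probability p^n. The independence assumption and the per-step rates below are illustrative simplifications, not measurements of o3 or any other model.

```python
# Minimal sketch: end-to-end success when per-step errors compound.
# Assumes each step succeeds independently with the same probability p_step,
# which is a simplification; real agent errors are often correlated.
import math


def task_success(p_step: float, n_steps: int) -> float:
    """Probability that all n_steps succeed, given per-step success p_step."""
    return p_step ** n_steps


def steps_until_half(p_step: float) -> float:
    """Number of steps after which end-to-end success falls to 50%."""
    if not 0.0 < p_step < 1.0:
        raise ValueError("p_step must be strictly between 0 and 1")
    return math.log(0.5) / math.log(p_step)


if __name__ == "__main__":
    for p in (0.90, 0.99, 0.999):
        print(f"p={p}: 10 steps -> {task_success(p, 10):.3f}, "
              f"100 steps -> {task_success(p, 100):.3f}, "
              f"50% point ~ {steps_until_half(p):.0f} steps")
```

Even at 99% per-step reliability, a 100-step task succeeds only about 37% of the time (0.99^100 ≈ 0.366), and success halves roughly every 69 steps: the same exponential shape that motivates a half-life.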

Then, can we measure the usefulness of agentic AI tools with a half-life?

Data from Kwa et al. (2025) show that AI agent success rates decay exponentially with task duration 9, which implies a half-life for usefulness (again, not a popular topic among people who believe AI is taking control of the world). Key points:

Half-life metric: The task length at which an agent succeeds 50% of the time (e.g., Claude 3.7: 59 minutes) 9.

Scaling law: Every 7 months, achievable task duration doubles 9.

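High-stakes barrier: T<sub>99%</sub> ≈ T<sub>50%</sub>/70, i.e., the task length an agent can complete with 99% success is roughly 1/70 of its 50% horizon, demanding years of progress before long tasks are reliable.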
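Under a constant-hazard (exponential) model, the success rate on a task of length t is S(t) = 0.5^(t / T50), so the task length achievable at a target success rate p is T_p = T50 · ln(p) / ln(0.5). For p = 0.99 this gives about T50/69, which is where the roughly 1/70 factor comes from. The sketch below applies this using the 59-minute Claude 3.7 figure and the 7-month doubling time quoted above; assuming that doubling trend continues unchanged is my own simplification.

```python
# Minimal sketch of the half-life model of agent success.
# Assumes success decays exponentially with task length (constant hazard rate)
# and that the 50% horizon keeps doubling on a fixed schedule.
import math

T50_MINUTES = 59.0        # Claude 3.7 50% horizon, as quoted in the text
DOUBLING_MONTHS = 7.0     # doubling time of the 50% horizon, as quoted in the text


def success_rate(task_minutes: float, t50: float = T50_MINUTES) -> float:
    """S(t) = 0.5 ** (t / T50): probability of completing a task of this length."""
    return 0.5 ** (task_minutes / t50)


def horizon_at(p: float, t50: float = T50_MINUTES) -> float:
    """Task length (minutes) achievable at success rate p: T_p = T50 * ln(p) / ln(0.5)."""
    if not 0.0 < p < 1.0:
        raise ValueError("p must be strictly between 0 and 1")
    return t50 * math.log(p) / math.log(0.5)


def months_until(target_minutes: float, p: float) -> float:
    """Months until T_p reaches target_minutes, if T50 doubles every DOUBLING_MONTHS."""
    doublings = math.log2(target_minutes / horizon_at(p))
    return max(0.0, doublings * DOUBLING_MONTHS)


if __name__ == "__main__":
    t99 = horizon_at(0.99)
    print(f"T50 / T99 ratio: {T50_MINUTES / t99:.1f}")
    print(f"T99 today: {t99:.2f} minutes")
    print(f"Success on a 2-hour task: {success_rate(120):.2f}")
    print(f"Months until T99 reaches 59 minutes: {months_until(59, 0.99):.0f}")
```

With these inputs, getting a 99%-reliable horizon up to today's 59-minute 50% horizon takes log2(69) ≈ 6.1 doublings, or roughly 43 months (about 3.6 years), which is what "years of progress" amounts to under this model.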

My summary

Apple’s paper was correct that current models excel at pattern matching, not generalized reasoning. Failures stem from context constraints and evaluation misalignment, which will always introduce more errors related to being unable to fully account for every detail in large context windows, leading to further compounding error. The ROI of each model use case could be valued according to a half-life (which I think already happens today).

References (some removed to keep this post succinct):

1 The Illusion of Thinking (Shojaee et al., 2025)